The concept of Artificial Intelligence (AI)[1] is at the forefront of the news cycle, with ongoing discussions about its nature and boundaries. This may partly be credited to the successful marketing of the term ‘AI.’ The AI Act adopted by the European Parliament[2] follows the definition proposed by the OECD, describing an AI system as “a machine-based system that […] infers from the input it receives how to generate outputs such as predictions, content, recommendations, or decisions that can affect physical or virtual environments.” Human intelligence and AI have distinct features and capabilities. Human intelligence, rooted in biological neural processes, is characterized by consciousness, emotional comprehension, and creative thinking. It allows humans to learn from experience, adapt to new situations, and navigate complex social interactions[3]. By contrast, AI excels at rapidly processing large volumes of data, spotting patterns, and executing specific tasks efficiently[4]. However, AI lacks the generality and emotional depth of human intelligence. Their respective strengths and limits make human and artificial intelligence complementary in many applications[5]. One human-defined objective to which AI might be applied lies in the military domain: gaining the upper hand militarily. We will explore if, when, and how that might be possible.

Worldwide, many believe that integrating machine learning into the military could make it more efficient. From algorithms that assist in recruitment to those designed for surveillance and those deployed directly in combat, AI applications could redefine the nature of future warfare. Nevertheless, adopting AI also entails notable risks.

Military Use of AI

AI is advancing so quickly that the belief that it will disrupt numerous industries, including security, grows stronger almost daily. More than twenty-four countries[6] have published national AI strategies, and several states are aggressively pursuing the integration of AI into their armed forces.

Artificial intelligence[7] appears to have multiple applications in military operations, including strategic decision-making, training, and actual military engagements. In strategic decision-making, AI might enable fast and precise scenario analysis, supporting rational action in urgent military situations. In training, it is hoped that AI could enable personalized instruction, unbiased evaluations, merit-based promotions, and realistic simulations and exercises. In military operations, AI might speed up the processing of data from various sources to aid decision-making, streamline logistics through optimization, and reinforce support systems.

For some experts, the militarization[8] of Artificial Intelligence is not inherently more worrying than the militarization of computers or electricity, a point of view many find naïve. Nonetheless, most experts argue that specific military applications of AI (lethal autonomous weapons and nuclear operations being the first two that come to mind) could pose significant risks. Furthermore, a more pressing prospect that demands attention is the overall impact of the “intelligentization”[9] or “cognitization” of military operations, which many argue could fundamentally reshape the nature of warfare.

War in the Cognitive Age

AI will bring a new depth to warfare by augmenting human cognition. Powered by AI, machines, whether physical or digital, can perform tasks independently, albeit within specific limits.

No AI system, or set of systems, can ‘yet’ match the flexibility, resilience, and adaptability of human intelligence.[10] In the era of cognitive warfare, experts predict that AI will operate alongside human intelligence rather than replace it. The most effective militaries will likely be those that combine AI and human cognition.

Many discussions[11] of military AI understandably focus on its downsides, but AI can also help protect civilians, reduce casualties, and improve operational effectiveness. AI applications can classify data, detect anomalies, predict future behavior, and optimize tasks, all of which can substantially boost performance in military contexts. Given enough data and well-defined tasks, AI systems can classify military objects, spot unusual behavior, forecast enemy actions, and improve the performance of military systems.

AI will enable the fielding of autonomous vehicles that are smaller, stealthier, faster, and more numerous, able to persist longer on the battlefield and to take greater risks.[12] Swarming systems could be useful for tasks such as reconnaissance, logistics, medical evacuation, resupply, offense, and defense.

The most impactful uses of AI will likely be in command and control.[13] With AI, militaries can operate faster, field more systems, and carry out complex, distributed operations. This will enhance battlefield awareness, speed, precision, and coordination. However, humans will remain essential in real-world combat because machines struggle with unexpected situations, and real combat is far more complex than any computer simulation.

While AI might seem mainly to favor offensive tactics, it is also useful for defense. Because AI is so versatile, its effect on the balance between offense and defense will depend on how it is used, and that effect may shift over time.

Counterterrorism is another niche area where AI could be put to good use. AI might become a vital[14] tool for counterterrorism operations, providing quick and accurate information for informed decisions. By combining AI analysis with human judgment, we can better identify threats, discover patterns, track suspects, and respond to attacks while reducing the risk of harm to society.

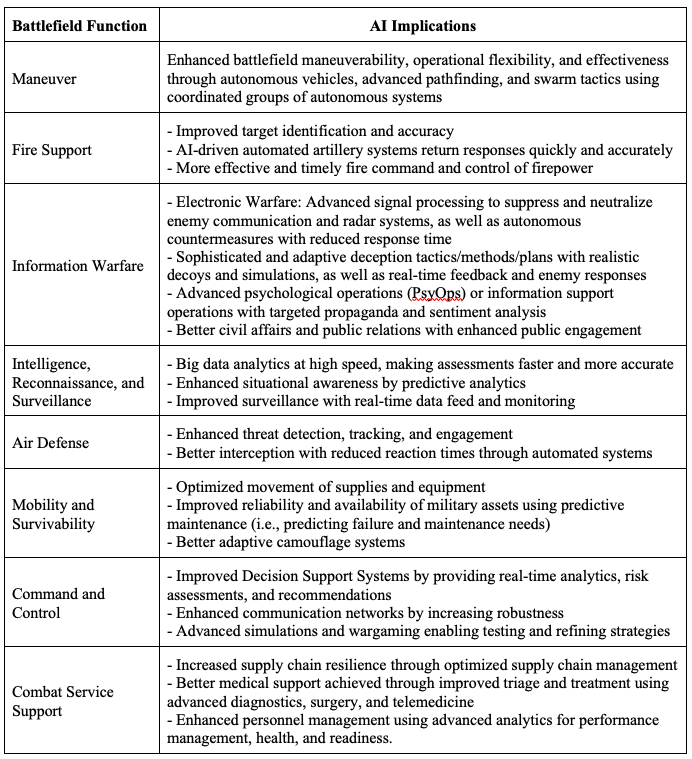

AI Implications for Battlefield Functions

It will also be critical to analyze the implications of AI for battlefield functions (BFs): the ongoing processes or activities needed to complete missions or support essential tasks, which facilitate task coordination, interaction between different types of military forces, and connections between the various battlefield operating systems (BOSs). AI is expected to significantly enhance BFs, enabling more efficient support of friendly forces, more effective disruption of enemy capacity and capabilities, and greater influence over enemy and public perceptions. Table 1 summarizes the implications of AI for BFs.

Table 1. Implications of AI on BFs

Potential Risks from Military AI Applications

Despite its numerous benefits, AI also has several limitations[15] and risks. First, AI still lags behind human intelligence, and its narrow focus can lead to subpar performance[16] in some scenarios. Failures can range from subtle biases to catastrophic accidents. More importantly, the complexity of advanced AI systems and their nature as ‘black boxes’ make them opaque, which makes it difficult to predict how they will interact with the real world. Consequently, there is a continual risk of accidents, and AI systems are vulnerable to attacks that manipulate the data they are exposed to.

These limitations are especially pronounced in military settings, which are chaotic, unpredictable, and adversarial. Military AI systems will undoubtedly encounter challenges, suffer accidents, and be deliberately manipulated by adversaries. Therefore, any evaluation of AI’s role in warfare[17] must address the significant fragility of AI systems and how it will affect their effectiveness in combat.

Accident Risk

Military AI systems need realistic testing under wartime conditions, but there is little data for this. Training simulations can help, but they cannot fully reproduce the chaos and violence of real war. This gap could mean that an AI system works well in training but fails in actual combat, leading to accidents or ineffective military systems. Such accidents could cause serious harm, such as unintended conflict escalation or civilian casualties. Vulnerabilities to hacking could also destabilize crises and heighten tensions between countries.

Autonomy and Pre-delegated Authority

Even if AI systems work perfectly, a challenge remains: predicting whether their actions in a crisis will match what leaders want at that moment. Using autonomous systems means delegating authority to them in advance for specific actions, but leaders may change their minds during an emergency. In the Cuban Missile Crisis,[18] US leaders had planned to attack if a US plane was shot down over Cuba, but when it happened, they changed their plan. This is projection bias: humans struggle to predict their own future preferences. So even if autonomous systems follow their programming exactly, they might not act as human leaders want, increasing the risk that crises or conflicts escalate.

Prediction and Trust in Automation

Relying on humans to oversee AI systems and limiting AI to advisory roles does not eliminate all the risks. People often place too much trust in machines, a tendency known as automation bias.[19]

Excessive trust in machines can cause accidents and mistakes, even before a war starts. Prediction algorithms depend on the data they are trained on, yet there is little data on rare events such as surprise attacks. If the data is flawed, the analysis will be wrong, and AI’s “black box” nature, which hides its inner workings, can conceal these problems.

Nuclear Stability Risks

The dangers of AI and automation are especially acute for nuclear weapons. Accidents, pre-delegated authority, or overreliance on automation could have severe consequences. Nuclear early warning systems have produced false alarms in the past,[20] and the complexity of AI systems might lead human operators to trust them too much. Using AI or automation in other parts of nuclear operations could also be risky. For example, an accident involving a nuclear-armed drone could lead to loss of control over the nuclear payload or mistakenly signal escalation to an adversary.

Competitive Dynamics and Security Dilemmas

AI is driven largely by commercial innovation, which militaries then adopt for defense. Yet competition to adopt it could leave everyone less secure. Two key risks stand out: speed and safety. In the pursuit of speed, automation may sideline humans in military systems, raising the risk of accidents and cyber conflicts on a global scale. Safety is equally crucial: if states sacrifice it to keep pace with competitors, all nations could become less secure. Human control over military systems therefore remains vital.

Mitigating Potential Risks

Unlike discrete technologies such as missiles, AI is a versatile, general-purpose tool, similar to computers or engines. While concerns about an “AI arms race”[21] may be exaggerated, real risks exist. Despite leaders’ rhetoric, military spending on AI remains relatively low. Rather than an actual arms race, military adoption of AI resembles the long-standing pattern of militaries embracing new technologies. However, integrating AI into national security and warfare carries genuine risks.[22] Acknowledging these risks is not sufficient; actionable steps are needed to minimize the dangers of military AI competition.

Arms control is one way to reduce AI risks, but states can also take unilateral steps. Confidence-building measures[23] are another potential tool for reducing the risks of military AI competition, each with its own advantages and drawbacks. As they study these risks, scholars and policymakers should weigh confidence-building measures alongside other approaches, such as traditional arms control.

Conclusions

AI is a versatile technology with numerous potential military uses, as this article has discussed. Alongside its potential benefits, its use raises ethical concerns and risks, particularly in lethal autonomous weapon systems (LAWS). It also carries risks stemming from changes to the nature of warfare, from the current limitations of AI technology, and from its application in missions such as nuclear operations.

As nations integrate AI into their armed forces, policymakers must acknowledge these risks and work to mitigate them. Expecting militaries to abstain from AI is as unrealistic as expecting them to abstain from computers or electricity. What matters is how AI is integrated, and various approaches are available to manage the risks of military AI competition.

Establishing a legal framework with clear definitions and ethical principles, ensuring human control and oversight over AI systems, and prioritizing cybersecurity and AI trustworthiness are essential. Such a framework must also allocate legal responsibilities, while ongoing debates, particularly around the EU’s AI Act, continue to shape which systems fall within its scope. The EU should lead in developing and promoting this framework globally, addressing fundamental questions about human dignity, rights, proportionality, and accountability.

[1] Press Release: Mind the Gap in Standardisation of Cybersecurity for Artificial Intelligence, April 27, 2023 https://www.enisa.europa.eu/news/mind-the-gap-in-standardisation-of-cybersecurity-for-artificial-intelligence

[2] European Parliament 2019-2024, Texts Adopted, P9_TA(2024)0138, Artificial Intelligence Act, https://www.europarl.europa.eu/doceo/document/TA-9-2024-0138_EN.pdf

[3] Goleman, D. (1995). Emotional Intelligence: Why It Can Matter More Than IQ. Bantam Books.

[4] Russell, S., & Norvig, P. (2016). Artificial Intelligence: A Modern Approach. Pearson.

[5] Marr, B. (2018). The Key Definitions Of Artificial Intelligence (AI) That Explain Its Importance, February 14, 2018, https://www.forbes.com/sites/bernardmarr/2018/02/14/the-key-definitions-of-artificial-intelligence-ai-that-explain-its-importance/

[6] Tim Dutton, An Overview of National AI Strategies, June 28, 2018 https://medium.com/politics-ai/an-overview-of-national-ai-strategies-2a70ec6edfd

[7] Eray Eliaçık, Guns, and Codes: The era of AI-wars begins, August 17, 2022 https://dataconomy.com/2022/08/17/how-is-artificial-intelligence-used-in-the-military/#Future_of_artificial_intelligence_in_military

[8] Militarization-Artificial Intelligence, https://digitallibrary.un.org/record/3972613?ln=en&v=pdf

[9] Elsa B. Kania, Battlefield Singularity: Artificial Intelligence, Military Revolution, and China’s Future Military Power, November 28, 2017 https://www.cnas.org/publications/reports/battlefield-singularity-artificial-intelligence-military-revolution-and-chinas-future-military-power

[10] Militarization-Artificial Intelligence, https://digitallibrary.un.org/record/3972613?ln=en&v=pdf

[11] Daphné Richemond-Barak, Beyond killer robots: How AI impacts security, military affairs, September 30, 2022, https://www.c4isrnet.com/unmanned/robotics/2022/09/30/beyond-killer-robots-how-ai-impacts-security-military-affairs/

[12] Paul Scharre, Robotics on the Battlefield, Part II: The Coming Swarm, Center for a New American Security, October 15, 2014, https://www.cnas.org/publications/reports/robotics-on-the-battlefield-part-ii-the-coming-swarm

[13] Militarization-Artificial Intelligence, https://digitallibrary.un.org/record/3972613?ln=en&v=pdf

[14] Raquel Velasco Ceballos, AI in Military Affairs: Its Role in the Decision-Making Process Towards a Counter-Terrorism Operation, November 2022, Finabel, https://finabel.org/wp-content/uploads/2022/11/Copy-of-New-InfoFlash-Template.Sample.pdf

[15] Paul Scharre, Military Applications of Artificial Intelligence: Potential Risks to International Peace and Security, Center for a New American Security, July 2019, https://stanleycenter.org/wp-content/uploads/2020/05/MilitaryApplicationsofArtificialIntelligence-US.pdf

[16] Paul Scharre, Military Applications of Artificial Intelligence: Potential Risks to International Peace and Security, Center for a New American Security, July 2019, https://stanleycenter.org/wp-content/uploads/2020/05/MilitaryApplicationsofArtificialIntelligence-US.pdf

[17] Paul Scharre, Military Applications of Artificial Intelligence: Potential Risks to International Peace and Security, Center for a New American Security, July 2019, https://stanleycenter.org/wp-content/uploads/2020/05/MilitaryApplicationsofArtificialIntelligence-US.pdf

[18] Militarization-Artificial Intelligence, https://digitallibrary.un.org/record/3972613?ln=en&v=pdf

[19] Kate Goddard, Abdul Roudsari and Jeremy C. Wyatt, Automation bias: a systematic review of frequency, effect mediators, and mitigators, J Am Med Inform Assoc. 2012 Jan-Feb; 19(1): 121–127, published online June 16, 2011, doi: 10.1136/amiajnl-2011-000089, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3240751/

[20] Patricia Lewis et al., Too Close for Comfort: Cases of Near Nuclear Use and Options for Policy, Royal Institute of International Affairs, London, April 2014, https://www.chathamhouse.org/2014/04/too-close-comfort-cases-near-nuclear-use-and-options-policy

[21] Michael Horowitz and Paul Scharre, AI and International Stability: Risks and Confidence-Building Measures, January 12, 2021 https://www.cnas.org/publications/reports/ai-and-international-stability-risks-and-confidence-building-measures

[22] Michael Horowitz and Paul Scharre, AI and International Stability: Risks and Confidence-Building Measures, January 12, 2021 https://www.cnas.org/publications/reports/ai-and-international-stability-risks-and-confidence-building-measures

[23] Michael Horowitz and Paul Scharre, AI and International Stability: Risks and Confidence-Building Measures, January 12, 2021 https://www.cnas.org/publications/reports/ai-and-international-stability-risks-and-confidence-building-measures